1. **Review your program's current performance indicators. How many link directly to outcomes in a PLM structure?** If indicators measure outputs or activities rather than outcomes, what does that reveal about how clearly the program logic is articulated?

2. **Think about a program that consistently underperforms despite adequate resources. Could the issue be flawed assumptions in the causal logic?** Which assumptions would you test first, and how?

3. **Examine a recent program evaluation that concluded "the program didn't work." Was there an explicit PLM showing the assumed causal mechanism?** Without a PLM, how can evaluators tell whether the program theory was sound but poorly implemented, or whether the theory itself was flawed?

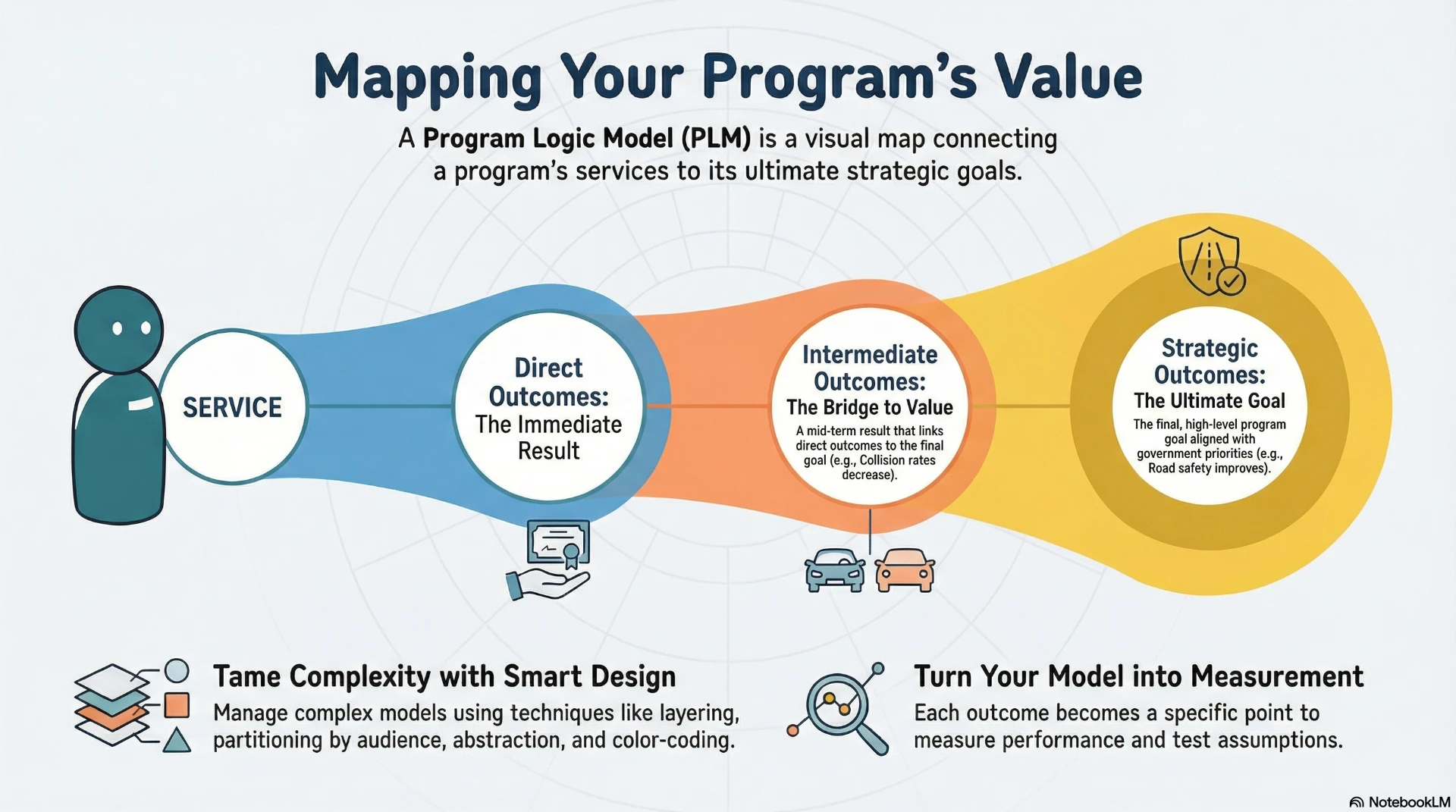

4. **Consider a complex multi-service program in your organization. If you built a complete PLM, would it be readable as-is, or would it need complexity management?** Which strategy (layering, partitioning, abstraction, or color coding) would be most effective in your context?

5. **Think about how performance data flows in your organization. Does it enable testing of causal assumptions, or just track activities?** If you can't connect performance data to outcome logic, what strategic questions remain unanswerable?